kNN algorithm PDF Hawkes Bay

To address these limitations of traditional kNN text classification, an improved kNN text classification algorithm based on clustering centers is proposed in this paper. First, the given training sets are compressed and the samples near the border are deleted, which eliminates the multi-peak effect of the training sample sets. Second, the training sample sets of each category are …

k Nearest Neighbors algorithm (kNN)

The k Nearest Neighbor Rule (k-NNR) is a very intuitive method that classifies unlabeled examples based on their similarity with examples in the training set. For a given unlabeled example x_u ∈ ℝ^D, find the k “closest” labeled examples in the training data set and assign x_u to the class that appears most frequently within the k-subset.
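
The k-NNR rule can be sketched in a few lines of Python. This is a minimal illustration rather than any particular source's implementation: it assumes numeric feature tuples and Euclidean distance, and the helper names `euclidean` and `knn_classify` are hypothetical.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_classify(train_X, train_y, x_u, k=3):
    """Assign x_u to the most frequent class among its k closest training examples."""
    # Rank training indices by their distance to the unlabeled example.
    order = sorted(range(len(train_X)), key=lambda i: euclidean(train_X[i], x_u))
    k_subset = [train_y[i] for i in order[:k]]
    # Majority vote. Counter.most_common keeps equal counts in first-seen order,
    # so ties are broken in favour of the label encountered first in distance order.
    return Counter(k_subset).most_common(1)[0][0]

X = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
y = ["A", "A", "A", "B", "B", "B"]
print(knn_classify(X, y, (1.1, 1.0), k=3))  # A
print(knn_classify(X, y, (4.9, 5.0), k=3))  # B
```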

The kNN algorithm depends on the distance function and on the value of k, the number of nearest neighbors. Traditional kNN can select the best value of k using cross-validation, but this involves unnecessary processing of the dataset for every possible value of k. The proposed kNN algorithm is an optimized form of traditional kNN by …
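
To make the cross-validation step concrete, here is a sketch of the traditional exhaustive search: leave-one-out cross-validation (LOOCV) over a list of candidate k values. It is not the proposed optimized method; `knn_predict`, `loocv_accuracy` and `choose_k` are hypothetical names, and the toy data are invented for illustration. Every candidate k triggers another full pass over the dataset, which is exactly the repeated processing the paragraph describes.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, x, k):
    """Majority vote among the k training points closest to x."""
    order = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))
    return Counter(train_y[i] for i in order[:k]).most_common(1)[0][0]

def loocv_accuracy(X, y, k):
    """Hold out each point in turn and predict it from all the others."""
    hits = 0
    for i in range(len(X)):
        rest_X = X[:i] + X[i + 1:]
        rest_y = y[:i] + y[i + 1:]
        hits += knn_predict(rest_X, rest_y, X[i], k) == y[i]
    return hits / len(X)

def choose_k(X, y, candidates):
    """Pick the candidate with the best LOOCV accuracy; ties go to the smaller k."""
    return max(candidates, key=lambda k: (loocv_accuracy(X, y, k), -k))

# Two clusters plus one mislabelled "B" point inside the "A" cluster.
X = [(0, 0), (0, 1), (1, 0), (1, 1), (0.5, 0.5), (5, 5), (5, 6), (6, 5), (6, 6)]
y = ["A", "A", "A", "A", "B", "B", "B", "B", "B"]
print(choose_k(X, y, [1, 3, 5]))  # 3
```

Here k = 1 is fooled by the mislabelled point (every "A" point's nearest neighbour is that "B"), while k = 3 and k = 5 tie, so the smaller value wins.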

… the a priori algorithm, attack this problem cleverly, as we shall see when we cover association rule mining in Chapter 10. Most data mining methods are supervised methods, however, meaning that (1) there is a particular prespecified target variable, and (2) the algorithm is given many …

This paper proposes a new k Nearest Neighbor (kNN) algorithm based on sparse learning, to overcome the drawbacks of previous kNN algorithms, such as the fixed k value for each test …

K-nearest neighbor algorithm implemented in R from scratch: in the introduction to the k-nearest neighbor algorithm article, we learned the core concepts of the kNN algorithm, as well as its applications to real-world problems. In this post, we will implement the k-nearest neighbor algorithm on a dummy data set.

… enhancing the performance of K-Nearest Neighbor is proposed, which uses robust neighbors in the training data. This new classification method is called Modified K-Nearest Neighbor (MKNN). Inspired by the traditional KNN algorithm, its main idea is to classify test samples according to the tags of their neighbors.

Figure 1 plots the distribution of X0 values in the absence of missingness and after imputation with k = 1, 3 or 10 neighbors, in an additional experiment of 100 imputation runs on samples of size n = 400 with 30% of values missing completely at random (MCAR), in the context of the plain framework with the kNN algorithm.

An Improved k-Nearest Neighbor Classification Using Genetic Algorithm (N. Suguna and Dr. K. Thanushkodi): … Nearest Neighbor (KNN) algorithm, called Genetic KNN (GKNN), to overcome the limitations of traditional KNN. In the traditional KNN algorithm, initially the distance between all …
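
The kNN imputation setting in the Figure 1 description can be illustrated with a small sketch. It is not the framework used in that experiment: it assumes missing values are marked as `None`, measures distance only over the columns observed in the incomplete row, and draws donors only from complete rows. The name `knn_impute` and the toy data are hypothetical.

```python
import math

def knn_impute(rows, k=3):
    """Replace each None with the mean of that column over the k nearest donor rows.

    Assumptions: donors are the rows with no missing values (at least one must
    exist), and distance is Euclidean over the incomplete row's observed columns.
    """
    donors = [r for r in rows if None not in r]
    filled = []
    for r in rows:
        if None not in r:
            filled.append(list(r))
            continue
        observed = [j for j, v in enumerate(r) if v is not None]
        # Rank donors by distance computed on the observed columns only.
        nearest = sorted(
            donors,
            key=lambda d: math.sqrt(sum((r[j] - d[j]) ** 2 for j in observed)),
        )[:k]
        new_row = list(r)
        for j, v in enumerate(r):
            if v is None:
                new_row[j] = sum(d[j] for d in nearest) / len(nearest)
        filled.append(new_row)
    return filled

data = [[1.0, 2.0], [1.1, 2.2], [0.9, 1.8], [5.0, 10.0], [1.0, None]]
print(knn_impute(data, k=1)[-1])  # [1.0, 2.0]
```

Changing `k` (1, 3, 10 in the experiment above) trades the variance of a single donor's value against the smoothing effect of averaging over more neighbours.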

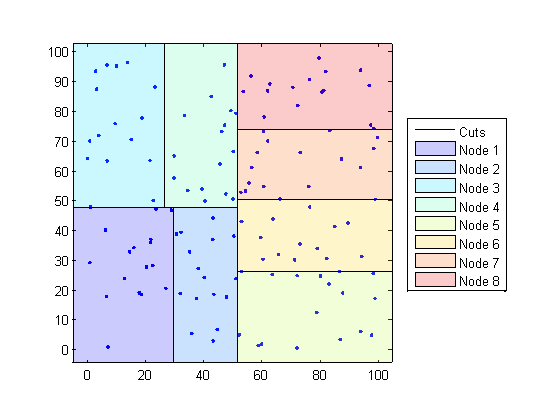

k-Nearest Neighbor Algorithms for Classification and Prediction

1 k-Nearest Neighbor Classification. The idea behind the k-Nearest Neighbor algorithm is to build a classification … probability density functions for each class. In other words, if we have a large …

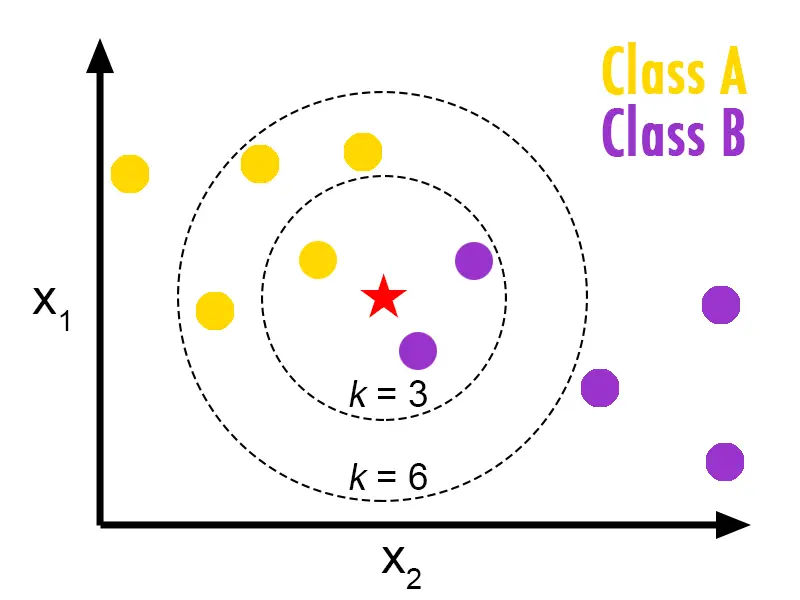

The parameters of the algorithm are the number k of neighbours and the procedure for combining the predictions of the k examples. The value of k has to be adjusted (for example, by cross-validation): we can overfit (k too low) or underfit (k too high). (Javier Béjar, LSI - FIB, K-nearest neighbours, Term 2012/2013)
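
The second parameter, the procedure for combining the neighbours' predictions, need not be a plain majority vote. One common alternative, assumed here for illustration, weights each neighbour's vote by the inverse of its distance (with a small `eps` to avoid division by zero). `weighted_knn_classify` is a hypothetical name.

```python
import math
from collections import defaultdict

def weighted_knn_classify(train_X, train_y, x, k=3, eps=1e-12):
    """Combine the k nearest neighbours' votes with inverse-distance weights."""
    neighbours = sorted(zip(train_X, train_y), key=lambda p: math.dist(p[0], x))[:k]
    scores = defaultdict(float)
    for point, label in neighbours:
        # Closer neighbours get larger weights; eps guards against d == 0.
        scores[label] += 1.0 / (math.dist(point, x) + eps)
    return max(scores, key=scores.get)

X = [(0.0, 0.0), (3.0, 0.0), (3.2, 0.0)]
y = ["A", "B", "B"]
print(weighted_knn_classify(X, y, (1.0, 0.0), k=3))  # A
```

With k = 3 and the query at (1, 0), a plain majority vote would return "B" (two votes to one), whereas the inverse-distance weights (about 1.0 for "A" against 0.5 + 0.45 for "B") favour the single, much closer "A" point.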

In pattern recognition, the k-nearest neighbors algorithm (k-NN) is a non-parametric method used for classification and regression. In both cases, the input consists of the k closest training examples in the feature space. The output depends on whether k-NN is used for classification or regression: in k-NN classification, the output is a class membership.

First, the paper applies an improved KNN algorithm, clipping-KNN, to the classification of image objects obtained through image segmentation. Second, original KNN, clipping-KNN …

Improved kNN Algorithm by Optimizing Cross-validation

kNN Algorithm with Data-Driven k Value

This section provides a brief background on the k-Nearest Neighbors algorithm that we will implement in this tutorial and on the Abalone dataset to which we will apply it. The k-Nearest Neighbors algorithm, or KNN for short, is a very simple technique: the entire training dataset is stored.

The k-Nearest-Neighbors (kNN) method of classification is one of the simplest methods in machine learning, and is a great way to introduce yourself to machine learning and classification in general. At its most basic level, it is essentially classification by finding the most similar data points in the training data and making an educated guess based on their classifications.

K Nearest Neighbor Algorithm Department of Computer

In pattern recognition, the K-Nearest Neighbor algorithm (KNN) is a method for classifying objects based on the closest training examples in the feature space. KNN is a type of instance-based learning, or lazy learning, where the function is only approximated locally and all computation is deferred until classification. See https://en.wikipedia.org/wiki/KNN.

K nearest neighbors is a simple algorithm that stores all available cases and classifies new cases based on a similarity measure (e.g., distance functions). KNN has been used in statistical estimation and pattern recognition since the beginning of the 1970s as a non-parametric technique.

In this section we review concepts such as KNN, genetic algorithms and heart disease. 2.1 K nearest neighbor classifier: K nearest neighbor (KNN) is a simple algorithm that stores all cases and classifies new cases based on a similarity measure. The KNN algorithm is also called 1) case-based reasoning, 2) k nearest neighbor, 3) example-based reasoning, 4) …
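
Which similarity measure is used matters. The sketch below assumes the Minkowski family of distance functions (p = 1 is Manhattan, p = 2 is Euclidean) and shows that the nearest stored case for the same query can change with the metric. `minkowski` and `nearest` are illustrative names, and the points are invented.

```python
def minkowski(a, b, p=2.0):
    """Minkowski distance: p = 1 is Manhattan, p = 2 is Euclidean."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1.0 / p)

def nearest(points, query, dist):
    """Return the stored point closest to the query under the given distance."""
    return min(points, key=lambda pt: dist(pt, query))

points = [(3.0, 3.0), (0.0, 4.5)]
query = (0.0, 0.0)
print(nearest(points, query, lambda a, b: minkowski(a, b, p=2)))  # (3.0, 3.0)
print(nearest(points, query, lambda a, b: minkowski(a, b, p=1)))  # (0.0, 4.5)
```

Under Euclidean distance (3, 3) is closer (about 4.24 against 4.5); under Manhattan distance it is farther (6 against 4.5).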

Overview of one of the simplest algorithms used in machine learning, the K-Nearest Neighbors (KNN) algorithm, with a step-by-step implementation in Python of a trading strategy that classifies new data points based on similarity measures.

The KNN algorithm can also be used for regression problems. The only difference from the methodology discussed above is that the prediction is the average of the nearest neighbors' values rather than a vote among them. KNN can be coded in a single line in R. I am yet to …
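
The regression variant replaces the vote with an average. A minimal sketch, assuming numeric targets, Euclidean distance and a plain (unweighted) mean; `knn_regress` is a hypothetical name.

```python
import math

def knn_regress(train_X, train_y, x, k=3):
    """Predict the average target value of the k nearest training points."""
    order = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))
    nearest = order[:k]
    return sum(train_y[i] for i in nearest) / len(nearest)

X = [(1.0,), (2.0,), (3.0,), (10.0,)]
y = [1.0, 2.0, 3.0, 10.0]
print(knn_regress(X, y, (2.0,), k=3))  # 2.0
```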

How does the KNN algorithm work? In KNN, K is the number of nearest neighbors, and the number of neighbors is the core deciding factor. K is generally chosen to be an odd number when there are 2 classes, so that the majority vote cannot end in a tie. When K = 1, the algorithm is known as the nearest neighbor algorithm; this is the simplest case. Suppose P1 is the point whose label needs to be predicted.
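
The odd-K rule of thumb can be checked on the labels of P1's K nearest neighbours. The helper `vote` below is hypothetical; it returns `None` when the top two counts tie instead of silently picking one.

```python
from collections import Counter

def vote(labels):
    """Majority label among the neighbours' labels, or None when the top counts tie."""
    ranked = Counter(labels).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # no strict majority
    return ranked[0][0]

print(vote(["red"]))                 # red  (K = 1: nearest neighbor rule)
print(vote(["red", "blue"]))         # None (K = 2 can split 1-1)
print(vote(["red", "blue", "red"]))  # red  (odd K cannot tie with 2 classes)
```

With three or more classes an odd K can still tie (for example, one vote each for three classes when K = 3), so the rule only guarantees a strict majority in the two-class case.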

The KNN algorithm is based on feature similarity: how closely out-of-sample features resemble our training set determines how we classify a given data point. Example of k-NN classification: the test sample (inside the circle) should be classified either to the first …

The theory of fuzzy sets is introduced into the K-nearest neighbor technique to develop a fuzzy version of the algorithm. Three methods of assigning fuzzy memberships to the labeled samples are proposed, and experimental results and comparisons to the crisp version are presented.
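
A sketch of a fuzzy kNN membership rule, following the widely cited form in which each of the k neighbours contributes its class memberships weighted by 1 / d^(2/(m − 1)). Assumptions: crisp (one-hot) memberships for the labelled samples, which is only one of the assignment methods the abstract mentions; a fuzzifier m = 2 (m must be greater than 1); and the hypothetical name `fuzzy_knn_memberships`.

```python
import math

def fuzzy_knn_memberships(train_X, train_U, x, k=3, m=2.0, eps=1e-12):
    """Fuzzy class memberships of x from its k nearest labelled samples.

    train_U[j] maps class -> membership of sample j (crisp one-hot here).
    Each neighbour is weighted by 1 / d ** (2 / (m - 1)).
    """
    nearest = sorted(range(len(train_X)), key=lambda j: math.dist(train_X[j], x))[:k]
    weights = {j: 1.0 / (math.dist(train_X[j], x) ** (2.0 / (m - 1.0)) + eps) for j in nearest}
    total = sum(weights.values())
    classes = {c for u in train_U for c in u}
    return {
        c: sum(train_U[j].get(c, 0.0) * w for j, w in weights.items()) / total
        for c in classes
    }

X = [(0.0, 0.0), (1.0, 0.0), (3.0, 0.0)]
U = [{"A": 1.0}, {"A": 1.0}, {"B": 1.0}]
mu = fuzzy_knn_memberships(X, U, (2.0, 0.0), k=3)
print({c: round(v, 3) for c, v in sorted(mu.items())})  # {'A': 0.556, 'B': 0.444}
```

Unlike the crisp vote, the result is a graded membership in every class (the values sum to 1), which a caller can threshold or report as a confidence.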

The traditional KNN text classification algorithm uses all training samples for classification, so it must process a huge number of training samples at a high computational cost, and it does not reflect the differing importance of different samples. To address these problems, an improved KNN text classification algorithm based on clustering centers is proposed in …

Implementing Your Own k-Nearest Neighbor Algorithm Using

A method for improving the robustness of the KNN classifier is proposed. First, the gradient descent attack method is used to attack the KNN algorithm. Second, the adversarial samples generated by the gradient descent attack are added to the training set to train a new KNN classifier.

kNN Machine Learning Algorithm Excel

kNN: computational complexity. The basic kNN algorithm stores all examples. Suppose we have n examples, each of dimension d. Computing the distance to one example takes O(d), finding one nearest neighbor takes O(nd), and finding the k closest examples takes O(knd). Thus the complexity is O(knd).

Distributed In-Memory Processing of All k Nearest Neighbor Queries (Georgios Chatzimilioudis, Constantinos Costa, Demetrios Zeinalipour-Yazti, Member, IEEE, Wang-Chien Lee, Member, IEEE, and Evaggelia Pitoura, Member, IEEE). Abstract: A wide spectrum of Internet-scale mobile applications, ranging from social networking, gaming and entertainment to emergency response and crisis …
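
The O(knd) figure corresponds to scanning the n examples once per neighbour. A common brute-force alternative, sketched here under the assumption of in-memory tuples, computes each distance once (O(nd)) and keeps the k best candidates with Python's `heapq.nsmallest` (roughly O(n log k)), which is no worse than O(knd). `k_nearest` is a hypothetical name.

```python
import heapq
import math

def k_nearest(train_X, x, k):
    """Indices of the k training points closest to x (brute force)."""
    # One O(d) distance per stored example: O(nd) in total.
    dists = ((math.dist(p, x), i) for i, p in enumerate(train_X))
    # nsmallest keeps only the k best candidates seen so far: about O(n log k).
    return [i for _, i in heapq.nsmallest(k, dists)]

points = [(0.0, 0.0), (5.0, 5.0), (1.0, 1.0), (2.0, 2.0)]
print(k_nearest(points, (0.0, 0.0), 2))  # [0, 2]
```

The per-query cost is still linear in n, which is why index structures and distributed processing, as in the paper above, matter at scale.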

Implementing Your Own k-Nearest Neighbor Algorithm Using Python: to get a feel for how classification works, we take a simple example of a classification algorithm, k-Nearest Neighbours (kNN), and build it from scratch in Python 2.

This is an in-depth tutorial designed to introduce you to a simple yet powerful classification algorithm called K-Nearest-Neighbors (KNN). We will go over the intuition and mathematical details of the algorithm, apply it to a real-world dataset to see exactly how it works, and gain an intrinsic understanding of its inner workings by writing it from scratch in code.

kNN Algorithm
• 1-NN: predict the same value/class as the nearest instance in the training set.
• k-NN: find the k closest training points (small ‖x_i − x_0‖ …
DANN example
• Idea: DANN creates a neighborhood that is elongated along …

k Nearest Neighbors algorithm (kNN). László Kozma (Lkozma@cis.hut.fi), Helsinki University of Technology, T-61.6020 Special Course in Computer and Information Science.

An Improved KNN Text Classification Algorithm Based on

Remote Sensing Image Classification Using kNN

LECTURE 8 Nearest Neighbors

As a simple, effective and nonparametric classification method, the kNN algorithm is widely used in text classification. However, there is an obvious problem: when the density of the training data is uneven, classification precision may decrease if we consider only the order of the first k nearest neighbors and ignore the differences in their distances.

k-NN is often used in search applications where you are looking for “similar” items, that is, when your task is some form of “find items similar to this one”. You would call this a k-NN search. The way you measure similarity is by creating a vector re…
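
If items are represented as vectors, a brute-force k-NN search just ranks them by similarity to the query. The sketch assumes cosine similarity over non-zero vectors (the source does not say which measure it uses); the document ids, vectors and function names are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def knn_search(index, query, k=2):
    """Return the ids of the k indexed items most similar to the query."""
    ranked = sorted(index.items(), key=lambda item: cosine(item[1], query), reverse=True)
    return [item_id for item_id, _ in ranked[:k]]

items = {
    "doc1": [1.0, 0.0, 1.0],
    "doc2": [0.9, 0.1, 1.1],
    "doc3": [0.0, 1.0, 0.0],
}
print(knn_search(items, [1.0, 0.0, 1.0], k=2))  # ['doc1', 'doc2']
```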

We construct a special tree-based index structure and propose a Greedy Depth-first Search algorithm to provide efficient multi-keyword ranked search. The secure KNN algorithm is used to encrypt the index and query vectors while ensuring accurate relevance score calculation between the encrypted index and query vectors.

A kNN algorithm is an extreme form of instance-based method, because all training observations are retained as part of the model. It is a competitive learning algorithm because it internally uses competition between model elements (data instances) to make a predictive decision.

kNN as a lazy (machine learning) algorithm
• kNN is considered a lazy learning algorithm. It
  • defers data processing until it receives a request to classify an unlabelled example,
  • replies to a request for information by combining its stored training data, and
  • discards the constructed answer and any intermediate results.
• Other names for lazy algorithms …
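
The lazy-learning behaviour listed above maps naturally onto a class whose `fit` does nothing but store data. This is a minimal sketch with a hypothetical class name `LazyKNN`, Euclidean distance and majority voting assumed.

```python
import math
from collections import Counter

class LazyKNN:
    """A lazy learner: fit() only stores data; all work happens at query time."""

    def __init__(self, k=3):
        self.k = k
        self._X, self._y = [], []

    def fit(self, X, y):
        # No model is built: the stored training data is the model.
        self._X, self._y = list(X), list(y)
        return self

    def predict_one(self, x):
        # Processing is deferred until a query arrives ...
        order = sorted(range(len(self._X)), key=lambda i: math.dist(self._X[i], x))
        votes = Counter(self._y[i] for i in order[: self.k])
        # ... and the distances and votes are local, discarded once we answer.
        return votes.most_common(1)[0][0]

model = LazyKNN(k=1).fit([(0.0, 0.0), (10.0, 10.0)], ["a", "b"])
print(model.predict_one((1.0, 1.0)))  # a
```

The trade-off is the usual one for lazy methods: training is instant, but every prediction pays the full cost of comparing against the stored examples.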

Introduction to k Nearest Neighbour Classification and Condensed Nearest Neighbour Data Reduction (Oliver Sutton, February 2012): … the data set later. This is achieved by removing it from the training set and running the kNN algorithm to predict a class for it. If this class matches, then the point is correct, otherwise …
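
The condensed nearest neighbour idea can be sketched with Hart's classic rule: start from one stored example and repeatedly add any training example that the current store misclassifies under 1-NN, until a full pass adds nothing. This standard formulation is offered for illustration and is not necessarily the exact procedure in the notes; `condense` is a hypothetical name that returns the indices of the kept examples.

```python
import math

def condense(X, y):
    """Hart-style condensing for 1-NN: return indices of a reduced training subset.

    Starts from the first example and keeps adding any example the current
    store misclassifies, until a full pass adds nothing.
    """
    stored = [0]
    in_store = {0}
    changed = True
    while changed:
        changed = False
        for i in range(len(X)):
            if i in in_store:
                continue
            # 1-NN prediction for example i using only the stored examples.
            nearest = min(stored, key=lambda j: math.dist(X[j], X[i]))
            if y[nearest] != y[i]:
                stored.append(i)
                in_store.add(i)
                changed = True
    return stored

X = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
y = ["A", "A", "A", "B", "B", "B"]
print(condense(X, y))  # [0, 3]
```

Skipping already-stored indices guarantees termination, since each extra pass must add a new index and there are only n of them.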

… packages in R. The caret package is used to build the kNN model. kNN modeling and prediction is a simple algorithm that regresses over the k nearest neighbour variables and uses the collected data to analyze the obtained data. The pander package is used to present the analyzed data as tables for easy recognition and readability. Thus …

… parameter in the k-nearest neighbor (KNN) algorithm, the solution depends on the idea of ensemble learning, in which a weak KNN classifier is used each time with a different K, from one up to the square root of the size of the training set. The results of the weak classifiers are combined using the weighted sum rule.
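
The ensemble idea can be sketched as follows. The source only says the weak classifiers are combined with a weighted sum rule, so the per-classifier weight 1/k and the use of neighbour-class proportions as each weak classifier's output are assumptions made for illustration; `ensemble_knn` is a hypothetical name.

```python
import math
from collections import defaultdict

def ensemble_knn(train_X, train_y, x, weight=lambda k: 1.0 / k):
    """Weighted-sum ensemble of weak kNN classifiers, k = 1 .. floor(sqrt(n)).

    Each weak classifier outputs the class proportions among its k nearest
    neighbours; the default weight 1/k per classifier is an illustrative assumption.
    """
    n = len(train_X)
    # Sort once; every weak classifier reuses the same neighbour ranking.
    order = sorted(range(n), key=lambda i: math.dist(train_X[i], x))
    k_max = max(1, math.isqrt(n))
    scores = defaultdict(float)
    for k in range(1, k_max + 1):
        for i in order[:k]:
            scores[train_y[i]] += weight(k) / k  # weight times a 1/k share per neighbour
    return max(scores, key=scores.get)

X = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (10.0, 0.0), (11.0, 0.0),
     (12.0, 0.0), (13.0, 0.0), (14.0, 0.0), (15.0, 0.0)]
y = ["A"] * 3 + ["B"] * 6
print(ensemble_knn(X, y, (3.0, 0.0)))  # A
```

Sorting the neighbours once and reusing that order for every k avoids recomputing distances for each weak classifier, so the whole ensemble costs little more than a single query with k = floor(sqrt(n)).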